Monday, July 18, 2005

Saturday, July 16, 2005

Churches and Cathedrals

What a week!

After the Demo on Tuesday my whole team was pretty tapped. We went to the Church Brew Works to recover... What better way than enjoying a beer at church : ) After a couple of talks on Wednesday we met up with Professor Yang Cai at his lab at CMU. One of his students showed us around the campus (very nice!). The buildings were extremely old and well kept, and the receptionist in the CS building was a roboceptionist, a good mix of old and new. We got to play with the lab's eye tracking system (see the picture on Flickr). If you look at a spot on the screen for 5 seconds or longer, that spot gets selected.

We headed over to the Cathedral of Learning to find 42 stories of classrooms and lounges for students to study in etc. After spending some time taking pictures of the main entrance, we headed inside to find a gorgeous foyer that required more photo-taking time (thank God for digital). We finally headed up the network of elevators that you have to take to get to the top; each one only went up 10 floors or so. Once at the top we stumbled into a group of U Pitt students in what must have been a student lounge. I overheard them talking about their experiences interning at the hospital and noticed that one of the guys had his shirt off... "Very odd" I thought, "Where's the gym?" I didn't ask of course, but after checking out the view of Pittsburgh from the top, we headed down and met a nice woman in the elevator who explained the shirtless guy... he had climbed the 42 stories of stairs for exercise.

Looking back at the week, I couldn't have asked for a better first AAAI. I met a whole community of people with similar interests and got to see a glimpse of a beautiful city and two universities.

Thanks to everyone who helped make AAAI a success!

Thursday, July 14, 2005

Wednesday, July 13, 2005

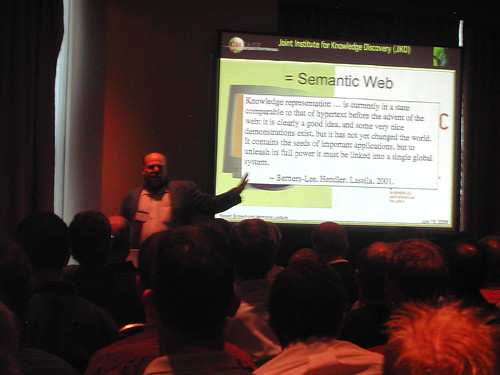

Jim Hendler: knowledge is power

Jim Hendler’s presentation on the semantic web was as well attended as the “Web 2.0” talk given by Tanenbaum. He used lots of demos, e.g. RDF in PDF, Swoop, and Swoogle, to justify the practical aspect of the semantic web -- “You are here”. The simple semantic web is less expressive than any existing KR language; however, it does hold a significant amount of knowledge (millions of documents and thousands of ontologies).

Participating in an experiment

Tuesday, July 12, 2005

Google's conference break game

A Little Story

I'll give a personal example to illustrate. Late Monday evening I was playing some jazz improvisations on the Westin hotel's lovely concert grand piano. After I finished, I had a chance to meet with one of the AAAI invited speakers who shared his interest in jazz performance and we briefly discussed some of our music related research and projects. All went well and I felt energized after such a stimulating day of conversations with such fascinating people. I meandered up to the next floor using the escalator and then realized that I needed to take the elevator to return to my hotel room. I pushed the elevator call button and when the door opened, discovered that the same invited speaker was already in the elevator. Now, this is where my brain's attempt to use a stairwell (or perhaps a hallway) conversation rule failed rather spectacularly (at least in terms of the conversation's success).

Fortunately it was a temporal, not a spatial rule which was used incorrectly. Basically, what happened was that I didn't take into account the sharply defined time constraint imposed by the elevator itself, and when the invited speaker politely mentioned one of my projects, I launched into a series of statements about the project, probably due to my excitement about the subject. Unfortunately for me, the elevator abruptly "dinged", the door opened, and the speaker exited, saying a terse "good night", leaving me in a rather awkward state.

Some questions: If the main actor was a robot, would it detect this conversation failure? Could it learn from the mistake? (I hope I myself will!) Could a robot create a blog, or a narrative describing an incident that it experienced? What would an intelligent robot do if it entered an elevator with two invited speakers, one speaker a robot and the other human (and presumably the conversation rules / protocol would be different for each)? For example, if it decided to converse with the robot speaker, would it use natural language so as not to alienate the human speaker? Or maybe it would have a wireless, data-based conversation with the robot and a simultaneous natural language conversation with the human speaker. (But the time constraint might not apply to the wireless mode, and perhaps the two robots would not determine their conversation patterns by locality: robots might be connected to an intra-robot communications network which determines conversation patterns in other ways. I mentioned this to Caroline and it made her think of something from Jungian psychology.)

Anyways, enough rambling for now!

Monday, July 11, 2005

So, What's AI Research, Anyways?

On the opening day of ASAMAS, Gal Kaminka of Bar Ilan University gave an Introduction to Agents and Multiagent Systems. At the beginning of his speech, he mentioned an incident when his paper was rejected at an Agents conference because one of the reviewers thought that the research presented was not related to Agents. Gal was quite annoyed about that for a couple of weeks. That's quite expected; anyone would be annoyed if a peer-reviewer decides that you are not doing what you think you are doing. But then later Gal also talked about meeting a researcher at Bar Ilan who makes the best batteries and how according to him the battery maker was also a robotics researcher.

That got me thinking: Where is the line between AI Research and Non-AI Research? Does Machine Vision or Robotic Arm Design count as AI research?? Well, I think many would say so. But then what about the batteries and motors used in robots?? Is that AI research? If it is, then what about the chemicals used in batteries, which can be used in a robot? Is THAT AI research? How far do we go? Where do we draw the line?

[summary] sister conference highlights (monday morning)

The sister conference session is a convenient way to catch up on relevant and interesting research from conferences you have missed. I'm a little surprised that this session is not well attended.

KDD 04: The tutorials reflect current interests in data mining: data streams, time series, data quality and data cleaning, junk mail filtering, and graph analysis. This conference has an algorithmic and practical flavor, e.g. clustering is a more concrete form of "levels of abstraction". There was also a lot of industrial participation, and the KDD Cup went well.

ICAPS 05: This is a fairly young conference which merges several previous conferences on planning and scheduling. Scheduling papers increased (25%), and search continues to play a prominent role. The best paper presents a "complete and optimal" and "memory-bounded" beam search. Another interesting paper learns action models from plan traces without the need to manually annotate intermediate nodes in the trace. The competition on knowledge engineering is another way to attract participants from various relevant domains. Interesting points of agreement among participants: "applications get no respect" and "too many people spend too much time working on meaningless theories".

UAI 04: Probability and graphical representations have dominated in recent years. Tutorials were clustered on graphical models (esp. BBN). The best paper presents the case-factored diagram, a new and compact representation of structured statistical models, demonstrated on the problem of "finding the most likely parse of a given sentence".

Sunday, July 10, 2005

Agents Don't Need to be "Super Intelligent" to be Helpful

Sherbrooke's Spartacus robot is actually the only robot at this conference who is attempting the daunting task of competing in the Robot Challenge. Tomorrow morning, Spartacus will be dropped off at the entrance to the hotel, and will have to somehow take the elevator up to the correct registration floor, find the right registration desk, and after registration perform volunteer duties (in lieu of paying the conference fee) until it is time for his scheduled presentation time, at which he will present his latest work and answer questions from the audience. Not only that, but Spartacus will also interact and socialize with the other conference participants throughout. I wish him (it?) and the Sherbrooke team the best of luck!

Regarding robots, I am by no means an expert, or even remotely involved in that area myself, but I can easily envision a day when we will walk along a city street, no longer taking special notice of the additional pedestrian traffic: autonomous robots who will scurry about their daily business just as we humans do today. It shouldn't be too difficult to gain mass acceptance of these types of robots once they have been interviewed on TV, and come across as friendly, helpful, and even funny (maybe I'm going out on a limb here, but just wait 20 years and you'll see...)

In the afternoon I attended Mark T. Maybury's tutorial session on Intelligent User Interfaces. I can imagine that some of the attendees of this tutorial may have been put off by the somewhat dated video examples (for example there were a few from the ever so ancient time of 1990 to 1995), but I believe (and Dr. Maybury stated) that the differences between the concepts and ideas illustrated by those videos and the state of the art today are largely cosmetic. For example, it seemed that a huge part of Intelligent User Interfaces involves multimodal input, where a user would simultaneously gesture, look at an object, and speak, and these inputs would be synthesized and used as a basis for decision making, learning, or executing a task. This is obviously a problem that has not been solved completely today, even though over 10 years have passed since the celebration of first successes.

Dr. Maybury presented so many great ideas, some in more detail than others, but one idea which I was especially interested in (and which he generously expanded upon) is the concept of a software agent which identifies human experts within an organization by capturing and searching for keywords in the publicly available writings of the employees (e.g. if employees publish documents to a company repository, they can be considered to be fair game for keyword searching).

You might say that this system is not really that intelligent, but Maybury argued that this doesn't really matter - it can still be really helpful. (My example follows.) Let's say that company A has 2000 employees in 25 locations throughout the globe. What usually happens, without this new software agent system, is that an employee who needs to gain knowledge on a certain topic consults the immediate social network to find an expert, such as by asking coworkers on the same floor, or perhaps someone in the same office who is a hub in the company's social network. (That's why I think that even in this day and age when telecommuting is possible, most large software firms still have (large) brick and mortar offices.) However, with a software agent that can identify experts throughout an organization regardless of location, these social networks are no longer required to find experts. (Much like how web search is reducing the need for personal referrals to small service-based companies.)
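To make the idea concrete, here is a minimal sketch of keyword-based expert finding. It is only my own toy illustration, not the MITRE system: the `build_index` and `find_experts` functions, the author names, and the sample documents are all made up, and scoring by raw keyword counts is an assumption on my part.

```python
import re
from collections import Counter, defaultdict


def build_index(documents):
    """Map each keyword to a Counter of how often each author used it."""
    index = defaultdict(Counter)
    for author, text in documents:
        for word in re.findall(r"[a-z]+", text.lower()):
            index[word][author] += 1
    return index


def find_experts(index, query, top_n=3):
    """Rank authors by how often their public writings mention the query words."""
    scores = Counter()
    for word in re.findall(r"[a-z]+", query.lower()):
        scores.update(index.get(word, {}))
    return [author for author, _ in scores.most_common(top_n)]


# Hypothetical documents published to a shared company repository.
documents = [
    ("alice", "Notes on speech recognition and speech synthesis pipelines"),
    ("bob", "Quarterly budget planning for the Vancouver office"),
    ("carol", "Speech recognition error analysis"),
]
index = build_index(documents)
print(find_experts(index, "speech recognition"))  # ['alice', 'carol']
```

Nothing here is "intelligent", which is exactly the point: a plain keyword count already points you to people you would never have met on your own floor.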

In the future I really don't see how large companies could afford not to employ such an expert finding system (...and maybe some already have, but are just not telling.) Dr. Maybury mentioned that his organization (MITRE) did publish a paper on this idea before any patents were filed, so there is potentially still an opportunity for some newcomers to jump in with a fancy new product to serve this purpose. He gave one commercial example of Tacit.com which attempts to build the expert database using employee email monitoring. On a side note, just imagine what kind of "expert database" Google could have of the world, if they mined their Gmail archives 10 years from now! (Not that they would ever do that of course without our consent, but what if users requested that feature?!)

I have networked, and it was good.

Saturday, July 09, 2005

AAAI Doctoral Consortium summary (day one)

Jennifer Neville (UMass) -- Structure Learning for Statistical Relational Models

Motivation: latent groups can be detected from graph structure as well as node attributes. Subjects: graph analysis, clustering. Issues: (i) partitioning a network into groups using both link structure analysis and node property clustering (using EM); (ii) utilizing group assignments for better attribute-value prediction and unbiased feature selection. Comments: Groups, as mentioned in this talk, are disjoint; however, further work might involve instances where a node may belong to multiple groups. This problem can be viewed as a clustering problem which uses features from node attributes as well as graph structure information.

Shimon Whiteson (UTexas Austin) -- Improving Reinforcement Learning Function Approximators via Neuroevolution

Motivation: an adaptive scheduling policy can be learned using neuroevolution. Subjects: function approximation, reinforcement learning, evolutionary neural networks. Issues: (i) the transition matrix (state-action table) can be huge, and a neural network (NN) based Function Approximator (FA) is a compact alternative; (ii) NEAT+Q, which evolves both the weights and the topology of the NN using NEAT and Q learning, is helpful. Experiments show that Darwinian evolution achieves the best performance compared to Lamarckian evolution, randomized strategies, etc. Comments: Many are skeptical about whether evolving NNs could be used for online scheduling since it takes a lot of computational time. In order to evaluate the significance of the improvement, the optimal and worst cases are needed.

Bhaskara Marthi (UC Berkeley) -- Discourse Factors in Multi-Document Summarization

Motivation: Decomposition is needed for planning asynchronous tasks and many joint choices in a giant state space. Subjects: planning, reinforcement learning, optimization. Issues: (i) concurrent ALisp and coordination graphs are used for concurrent hierarchical task decomposition; (ii) decomposing the Q function w.r.t. subroutines requires reward decomposition, which is hard. Comments: Decomposing the Q function for the reward of "exiting a subroutine" is hard since there could be thousands of ways to exit.

Trey Smith (CMU) -- Rover Science Autonomy: Probabilistic Planning for Science-Aware Exploration

Motivation: Discover scientifically interesting objects in extreme environments (e.g., Mars). Subjects: planning. Issues: (i) planning navigation -- with maximum coverage over a spatial extent; (ii) selective sampling -- variety is preferred to a large number of copies of the same sample.

Marie desJardins (mentor from UMBC) -- Prepare talks for different contexts:

- Job talk. Try to amuse people with your work and how complex it is.

- Doctoral consortium talk. Present technical details and expose your strengths and weaknesses in front of the mentors; they are external experts who will provide valuable comments from all aspects. Take it as your trial thesis defense...

- Conference talk. Present ideas and keep the audience awake...

Snehal Thakkar (USC) -- Planning for Geospatial Data Integration

Motivation: Integrate spatial data on the Web, and support query answering using planning. Subjects: information integration, geospatial information systems. Issues: (i) a hierarchical ontology/taxonomy for modeling the spatial application domain; (ii) planning spatial information queries using filtering (to avoid querying many irrelevant sources).

Ozgur Simsek (UMass) -- Towards Competence in Autonomous Agents

Motivation: Define “useful skills”, and let agents learn them. Subjects: learning, knowledge discovery. Issues: "useful skills" are defined in three categories: (1) access skills: identify "access states", which are critical for making the search space fully searchable, especially for hard-to-access regions; (2) approach-discovery skills: how to achieve an "access state"; and (3) causal discovery skills: identify causal relations. Comments: How to memorize past states is still hard as the problem space scales. Experiences, as well as skills, can be reused.

Mykel J. Kochenderfer (U Edinburgh) -- Adaptive Modeling and Planning for Reactive Agents

Motivation: Efficient planning in real time for complex problems with large state and action spaces requires partitioning these spaces into a manageable number of regions. Subjects: reinforcement learning, clustering. Issues: Learn to partition the state and action spaces using online split and merge operations. Comments: This could be viewed as an incremental clustering problem in which nodes in trajectories are sample points for generating clusters, which in turn induce a partition of the state and action spaces.

Vincent Conitzer (CMU) -- Computational Aspects of Mechanism Design

Motivation: reach an optimal outcome by aggregating personal preferences. Subjects: information aggregation, game theory, multi-item optimization. Issues: To achieve an optimal outcome for multiple preferences, we can use automated aggregation mechanisms (e.g. voting and auctions) and bound agents' behavior. The VCG auction encourages bidders to reveal their true preferences (it discourages lying).
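For readers who have not seen why a VCG-style auction rewards honesty, here is a small sketch of the single-item special case, the sealed-bid second-price (Vickrey) auction. This is my own illustration and not taken from the talk; the function names, bidder names, and numbers are made up. The winner pays the second-highest bid, so shading or inflating your bid never beats bidding your true value.

```python
def second_price_auction(bids):
    """bids: dict of bidder -> bid. Highest bidder wins, pays the second-highest bid."""
    ranked = sorted(bids.items(), key=lambda kv: kv[1], reverse=True)
    winner = ranked[0][0]
    price = ranked[1][1] if len(ranked) > 1 else 0
    return winner, price


def utility(true_value, my_bid, other_bids, me="me"):
    """Payoff for bidder `me`: value minus price if they win, otherwise zero."""
    bids = dict(other_bids)
    bids[me] = my_bid
    winner, price = second_price_auction(bids)
    return true_value - price if winner == me else 0


others = {"a": 60, "b": 80}  # hypothetical competing bids

# True value 100: bidding truthfully wins at price 80; underbidding loses the item.
print(utility(100, 100, others))  # 20
print(utility(100, 70, others))   # 0

# True value 70: overbidding "wins" but at a loss.
print(utility(70, 70, others))    # 0
print(utility(70, 90, others))    # -10
```

The price you pay never depends on your own bid, only on whether you win, which is the property that generalizes to the multi-item VCG mechanism.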

Flat Beer

Wow, what a long day! We arrived last night from

For those of you not familiar with NLP there are three basic approaches to analyzing data. The knowledge-based approach uses knowledge like dictionaries to help find meaning in raw data. Supervised approaches use human annotated data in conjunction with dictionaries while unsupervised approaches use neither a dictionary nor annotated data, but rather look at the raw text to find similarity between contexts (this is indeed real intelligence).
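To give a flavour of the knowledge-based approach, here is a toy version of the classic simplified Lesk idea: pick the dictionary sense whose definition shares the most words with the surrounding context. This is only a sketch of mine, not code from the tutorial; the two glosses for "bank", the stopword list, and the function names are all invented for the example.

```python
import re

STOPWORDS = {"a", "an", "the", "of", "in", "on", "at", "to", "and", "or", "that", "is", "i", "my"}


def tokens(text):
    """Lowercased set of content words in a piece of text."""
    return {w for w in re.findall(r"[a-z]+", text.lower()) if w not in STOPWORDS}


def simplified_lesk(context, senses):
    """senses: dict of sense name -> dictionary gloss. Returns the best-overlapping sense."""
    context_words = tokens(context)
    return max(senses, key=lambda s: len(tokens(senses[s]) & context_words))


# Hypothetical mini-dictionary with two senses of "bank".
BANK_SENSES = {
    "bank/finance": "an institution that accepts deposits of money and makes loans",
    "bank/river": "sloping land at the edge of a river or lake",
}

print(simplified_lesk("I sat on the bank of the river and watched the water", BANK_SENSES))
print(simplified_lesk("I went to the bank to deposit money", BANK_SENSES))
```

The first sentence should come out as the river sense and the second as the finance sense. No annotated data is involved, only the dictionary, which is what sets this apart from the supervised approach.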

After the tutorials we mingled in the lobby trying to set up wireless access (which works much better now). I found this guy with the most appalling t-shirt. It turned out that he was part of a big group of people from UMBC. They seemed to be more in touch with the

Until tomorrow,

Caroline

The Web as a Collective Mind

What I really liked about Mihalcea and Pedersen's talk is that they took the time to put together lists of resources for aspiring researchers in this field, including several freely available algorithm implementations such as SenseTools, SenseRelate, SenseLearner, and Unsupervised SenseClusters.

In the afternoon I attended Tuomas Sandholm's tutorial session on Market Clearing Algorithms, and I found his topic frankly quite fascinating!! One area he discussed was mechanism design for multi-item auctions, which are for "multiple distinguishable items when bidders have preferences over combinations of items: complementarity and substitutability". Some examples he gave of these types of auctions are in transportation, where a trucker would be willing to accept a lower rate if he/she wins the contract to transport goods both to and from a destination (as opposed to just one way). On the way to our hotel I observed that our taxi was equipped with a fairly sophisticated wireless computer system, and I thought about how these types of auctions could also be relevant to taxi fare determination.
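Here is a small sketch of the trucking example as I understood it, written as a reverse auction where a shipper wants every lane covered at the lowest total cost. It is a brute-force toy of my own and not one of the algorithms from the tutorial; the `cheapest_cover` function, the trucker names, and the prices are all made up. Clearing combinatorial auctions in general is computationally hard, so trying every subset like this only works for a handful of bids.

```python
from itertools import combinations


def cheapest_cover(lanes, bids):
    """bids: list of (bidder, bundle_of_lanes, price).

    Returns (cost, winning_bids) for the cheapest set of bids that covers
    every lane exactly once, or (None, None) if no such set exists.
    """
    best_cost, best_set = None, None
    for k in range(1, len(bids) + 1):
        for subset in combinations(bids, k):
            covered = [lane for _, bundle, _ in subset for lane in bundle]
            if sorted(covered) != sorted(lanes):
                continue  # a lane is missing or covered twice
            cost = sum(price for _, _, price in subset)
            if best_cost is None or cost < best_cost:
                best_cost, best_set = cost, subset
    return best_cost, best_set


lanes = ["outbound", "return"]
bids = [
    ("trucker_a", ("outbound",), 500),
    ("trucker_b", ("return",), 550),
    # Complementarity: no empty trip back, so the round trip is offered cheaper.
    ("trucker_c", ("outbound", "return"), 800),
]
cost, winners = cheapest_cover(lanes, bids)
print(cost, [bidder for bidder, _, _ in winners])  # 800 ['trucker_c']
```

The bundle bid wins because it undercuts the two one-way bids combined (800 versus 1050), which is the kind of saving that item-by-item bidding cannot express.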

Other interesting points which Tuomas discussed involved the game theory of auctions, and problems such as a single agent using pseudonyms to pose as multiple agents, and collusion between agents. Now that many auctions are occurring virtually, preventing these problems becomes more difficult. Another set of ideas deals with the concept of an "elicitor" which facilitates the auction by "deciding what to ask from which bidder". Interestingly enough, with an elicitor there is an incentive for answering truthfully as long as all the other agents are also answering truthfully.

Howdy Bloggers

I'm Mark Carman. I come from Adelaide, Australia. I'm doing a Ph.D. in Trento, Italy. And I'm currently living and working in Los Angeles, California. Confusing hey.

I'm in the doctoral consortium at AAAI and haven't introduced myself till now, because I just got back from a honeymoon in the south of Italy....

My PhD work is on learning source definitions for use in composing web services. If you want to know what that means, come up to me at the conference and I'll be happy to tell you all about it.

Today I gave a talk at the Workshop on Planning for Grid and Web Services. I think people actually understood what I was talking about, so I was quite happy with how it went.

For the rest of the day I've been listening to the presentations at the doctoral consortium. The talks were quite interesting. Seems like Reinforcement Learning is in fashion this year - four of the talks were somehow related to it!

Below are a couple of photos I took: Note the size of the screen - I think it is the biggest I've ever seen!

We're off for dinner at Sonoma Grill now, so I'll have to post comments on the talks later.....

Thursday, July 07, 2005

Hello [AAAI] World! (from Li Ding)

I am Li DING, a 4th year PhD student advised by Tim Finin in the eBiquity group at UMBC. I am originally from Beijing, China. My research focus is on representing and sharing knowledge using semantic web technologies.

I maintain the Swoogle search engine for semantic web data.

I have a FOAF file with a list of my friends, and AAAI 05 is surely a great place for augmenting this list. See you all there!

Tuesday, July 05, 2005

Hello from Caroline

Hello from Jake

Sunday, July 03, 2005

Hello from Geoff

Hi everyone! I'm an undergrad student from Simon Fraser University in computing science and business. I'm really looking forward to participating in this conference, both as a student volunteer blogger, and a presenter at the Intelligent Systems Demonstrations that are happening on Tuesday evening (July 12th). As a musician, I'm especially interested in the application of Artificial Intelligence to music, and our group's demo, Song Search by Tapping, reflects that. Our supervisor at SFU is Dr. Diana Cukierman.

I'm also really looking forward to attending the tutorial sessions on Saturday and Sunday of the conference (July 9th & 10th). Word sense disambiguation is an area I am interested in, especially applied to natural language of web pages. I am also going to attend sessions on Market Clearing Algorithms, Empirical Methods for Artificial Intelligence, and Intelligent User Interfaces.

I'm honoured to be part of this blogging group, among those with such diverse and fascinating interests. I look forward to meeting you all!

On a different note, does anyone know of some good places to check out for live jazz in Pittsburgh?